Agent-User Interaction Protocol (AG-UI) is quickly becoming the standard for connecting agents with user interfaces.

It gives you a clean event stream to keep the UI in sync with what the agent is doing. All the communication is broken into typed events.

I have been digging into the protocol, especially its core event types, to understand how everything fits together. Here’s what I picked up and why it matters.

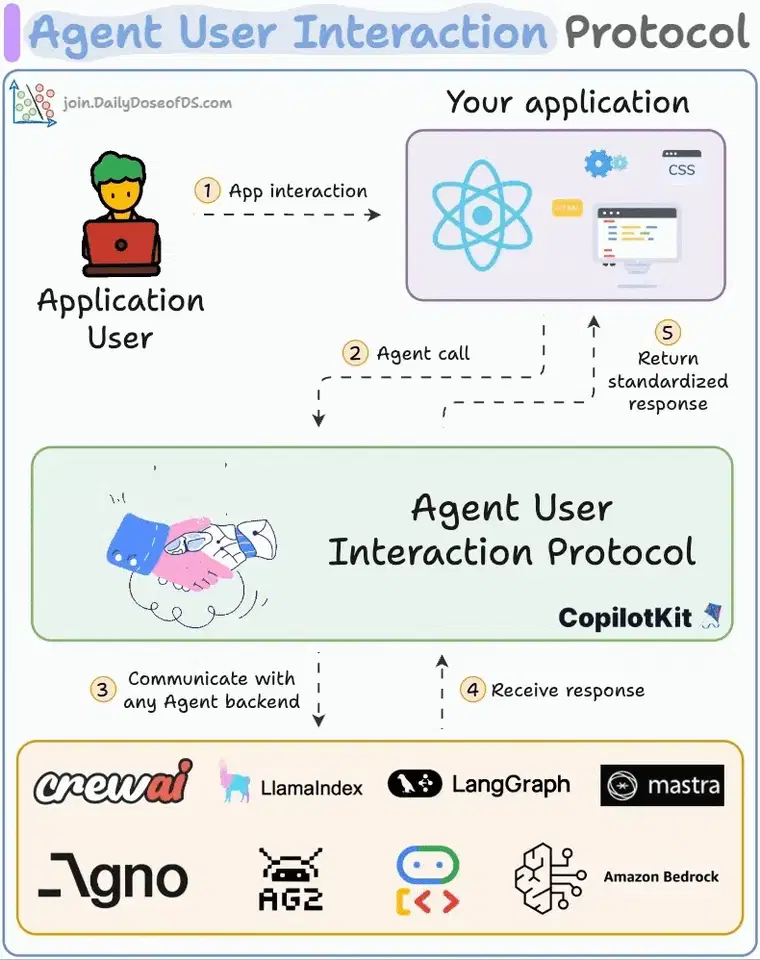

1. The Agentic Protocol (AG-UI)

AG-UI (Agent–User Interaction Protocol) is an open, lightweight, event-based protocol that standardizes real-time communication between AI agents and user-facing applications.

In an agentic app, the frontend (let's suppose a React UI) and the agent backend exchange a stream of JSON events (such as messages, tool calls, state updates, lifecycle signals) over WebSockets, SSE or HTTP.

This lets the UI stay in perfect sync with the agent’s progress, streaming tokens as they are generated, showing tool execution progress and reflecting live state changes.

Instead of custom WebSockets or ad-hoc JSON per agent, AG-UI provides a common vocabulary of events, so any AG-UI compatible agent (such as LangGraph, CrewAI, Mastra, LlamaIndex, Pydantic AI, Agno) can plug into any AG-UI aware frontend without rewriting the integration. Check the list of all supported frameworks.

For example, the diagram below shows how a user action in the UI is sent via AG-UI to any agent backend and responses flow back as standardized events:

Credit: dailydoseofds.com

You can create a new app using the CLI with the following command.

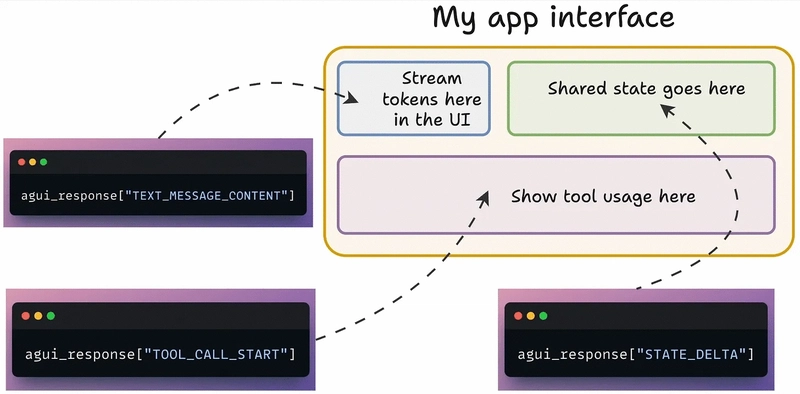

2. What are AG-UI Event Types and why should you care about them?

AG-UI defines 17 core event types (special cases included), covering everything an agent might do during its lifecycle. Think of events as the basic communication units between agents and frontends.

Each event is a JSON object with a type (such as "TextMessageContent" or "ToolCallStart") and a payload.

Credit: dailydoseofds.com

Because these events are standard and self-describing, the front-end knows exactly how to interpret them. For example:

- TEXT_MESSAGE_CONTENT: streams LLM tokens

- TOOL_CALL_START/END: convey function call progress

- STATE_DELTA: carries JSON Patch deltas to sync state

Standardizing these events decouples the UI from the agent logic and vice versa. The UI doesn’t need custom glue code to understand the agent’s behavior.

Any agent backend can emit AG-UI events.

And any AG-UI compliant UI can consume them.

The protocol groups all the events into five high-level categories:

✅ Lifecycle Events : Track the progress of an agent run (start, finish, errors, sub-steps).

✅ Text Message Events : Stream chat or other text content (token by token).

✅ Tool Call Events : Report calls to external tools or APIs and their results.

✅ State Management Events : Synchronize shared application state between agent and UI.

✅ Special Events : Generic passthrough or custom events for advanced use cases.
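To make the grouping concrete, here is a minimal sketch of a lookup table from event type to category. It assumes upper-snake-case type strings in the style of TEXT_MESSAGE_CONTENT for every event (including RAW and CUSTOM for the special events); `EVENT_CATEGORIES` and `category_of` are hypothetical names for this post, not part of any SDK.

```python
# Hypothetical lookup table: the category names mirror the five groups above,
# and the type strings are assumed to be upper snake case.
EVENT_CATEGORIES = {
    "lifecycle": ["RUN_STARTED", "RUN_FINISHED", "RUN_ERROR",
                  "STEP_STARTED", "STEP_FINISHED"],
    "text_message": ["TEXT_MESSAGE_START", "TEXT_MESSAGE_CONTENT",
                     "TEXT_MESSAGE_END"],
    "tool_call": ["TOOL_CALL_START", "TOOL_CALL_ARGS",
                  "TOOL_CALL_END", "TOOL_CALL_RESULT"],
    "state": ["STATE_SNAPSHOT", "STATE_DELTA", "MESSAGES_SNAPSHOT"],
    "special": ["RAW", "CUSTOM"],
}

# Invert the table so a UI can route an incoming event in one lookup.
CATEGORY_OF = {
    event_type: category
    for category, event_types in EVENT_CATEGORIES.items()
    for event_type in event_types
}


def category_of(event: dict) -> str:
    """Return the category for an event, or "unknown" for unrecognized types."""
    return CATEGORY_OF.get(event.get("type"), "unknown")


print(len(CATEGORY_OF), category_of({"type": "STATE_DELTA"}))
```

The table holds 17 entries, which lines up with the 17 core event types mentioned earlier.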

In the next section, let's learn all about them along with practical examples.

3. Breaking Down All Events with Practical Examples

If you are interested in exploring on your own, read the official docs, which include an overview, lifecycle events, flow patterns and more.

All events inherit from the BaseEvent type, which provides common properties shared across all event types:

- type: what kind of event it is

- timestamp (optional): when the event was created

- rawEvent (optional): the original event data if the event was transformed

There are other properties (like runId, threadId) that are specific to the event type.
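As a rough illustration of that shape, here is a small sketch using plain dataclasses. The field names come from the list above; the classes themselves are hypothetical stand-ins rather than the SDK's own types, and the integer timestamp is an assumption.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any, Optional


@dataclass
class BaseEvent:
    """Fields shared by every event (stand-in for illustration only)."""
    type: str                        # what kind of event it is
    timestamp: Optional[int] = None  # when the event was created (assumed int)
    rawEvent: Optional[Any] = None   # original event data, if it was transformed


@dataclass
class RunStartedEvent(BaseEvent):
    """Event-specific fields sit alongside the shared ones."""
    threadId: str = ""
    runId: str = ""


def to_json(event: BaseEvent) -> str:
    # Leave out optional fields that were never set.
    payload = {key: value for key, value in asdict(event).items() if value is not None}
    return json.dumps(payload)


event = RunStartedEvent(type="RUN_STARTED", threadId="thread-1", runId="run-1")
print(to_json(event))
```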

Event Encoding

The Agent User Interaction Protocol uses a streaming approach to send events from agents to clients. The EventEncoder class provides the functionality to encode events into a format that can be sent over HTTP.

We will be using it in the examples in all the event categories so here's a simple example:
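The SDK's EventEncoder is not reproduced here. Instead, this is a minimal stand-in that shows the general idea, assuming events travel as Server-Sent Events with one JSON object per `data:` frame. `encode_sse` and `decode_sse` are hypothetical helpers written for this post.

```python
import json


def encode_sse(event: dict) -> str:
    """Frame one event as an SSE message: a `data:` line followed by a blank line."""
    return f"data: {json.dumps(event)}\n\n"


def decode_sse(frame: str) -> dict:
    """Parse a single frame produced by encode_sse back into a dict."""
    prefix = "data: "
    if not frame.startswith(prefix):
        raise ValueError("not a data frame")
    return json.loads(frame[len(prefix):].strip())


frame = encode_sse({"type": "RUN_STARTED", "threadId": "thread-1", "runId": "run-1"})
print(repr(frame))
```

In a real server, each frame would be written to a streaming HTTP response whose content type is `text/event-stream`.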

Once the encoder is set up, agents can emit events in real time, and frontends can listen and react to them immediately. Read more on the docs.

Let's cover each category in-depth with examples.

✅ Lifecycle Events

Lifecycle events help to monitor the overall run and its sub-steps. They tell the UI when a run starts, progresses, succeeds or fails.

The five lifecycle events are:

1) RunStarted : signals the start of an agent run

2) RunFinished : signals successful completion of a run.

3) RunError : signals a failure during the run.

4) StepStarted (optional) : start of a sub-task within a run.

5) StepFinished : marks the completion of a sub-task.

There could be multiple StepStarted / StepFinished pairs within a single run, representing progress through intermediate sub-tasks.

Example flow:

RunStarted → (StepStarted → StepFinished …) → RunFinished

If something fails, RunError replaces RunFinished.

Here's a simple example of how events are emitted on the agent's side:
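A minimal sketch of that flow, written as a plain Python generator rather than with the SDK's event classes. The upper-snake-case type strings and the `stepName` and `message` fields are assumptions, and `run_agent` is a hypothetical helper.

```python
from typing import Callable, Iterator


def run_agent(steps: dict[str, Callable[[], None]],
              thread_id: str, run_id: str) -> Iterator[dict]:
    """Yield lifecycle events around a sequence of named steps."""
    yield {"type": "RUN_STARTED", "threadId": thread_id, "runId": run_id}
    try:
        for name, work in steps.items():
            yield {"type": "STEP_STARTED", "stepName": name}
            work()  # the actual sub-task
            yield {"type": "STEP_FINISHED", "stepName": name}
    except Exception as exc:
        # A failure ends the run with RUN_ERROR instead of RUN_FINISHED.
        yield {"type": "RUN_ERROR", "message": str(exc)}
        return
    yield {"type": "RUN_FINISHED", "threadId": thread_id, "runId": run_id}


def failing_step() -> None:
    raise RuntimeError("price feed unavailable")


ok_types = [e["type"] for e in run_agent({"fetch_prices": lambda: None}, "t1", "r1")]
bad_types = [e["type"] for e in run_agent({"fetch_prices": failing_step}, "t1", "r2")]
print(ok_types)
print(bad_types)
```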

Here is a simple example of the frontend side of a stock analysis agent.
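A real frontend would usually be written in TypeScript, but the handling logic is easy to sketch in Python. The `RunStatusView` class below is hypothetical; it only tracks what a UI would need for a loading indicator and an error banner, and it assumes the same upper-snake-case type strings plus `stepName` and `message` fields.

```python
from typing import Optional


class RunStatusView:
    """Tracks just enough run state to drive a spinner and an error banner."""

    def __init__(self) -> None:
        self.loading = False
        self.current_step: Optional[str] = None
        self.error: Optional[str] = None

    def handle(self, event: dict) -> None:
        kind = event["type"]
        if kind == "RUN_STARTED":
            self.loading, self.error = True, None
        elif kind == "STEP_STARTED":
            self.current_step = event.get("stepName")
        elif kind == "STEP_FINISHED":
            self.current_step = None
        elif kind == "RUN_FINISHED":
            self.loading = False
        elif kind == "RUN_ERROR":
            self.loading = False
            self.error = event.get("message", "unknown error")


view = RunStatusView()
for incoming in [
    {"type": "RUN_STARTED", "threadId": "t1", "runId": "r1"},
    {"type": "STEP_STARTED", "stepName": "analyze_stock"},
]:
    view.handle(incoming)
print(view.loading, view.current_step)
```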

Here the UI would listen for these events to know when to show loading indicators and when to display the final result. If something goes wrong, the agent would emit RunError instead, which the UI can catch to display an error message.

✅ Text Message Events

Text events carry human or assistant messages, typically streaming content token by token. There are three events defined in this category:

1) TEXT_MESSAGE_START : signals the start of a new message. Contains messageId and role (such as “developer”, “system”, “assistant”, “user”, “tool”) as properties.

2) TEXT_MESSAGE_CONTENT : carries a chunk of text (delta) as it’s generated, allowing the UI to display text in real time.

3) TEXT_MESSAGE_END : signals the end of the message.

In non-streaming scenarios, when the entire content is available at once, the agent might use the TextMessageChunk event, which sends complete text messages in a single event instead of the three-event sequence. Read more on docs.

Example flow:

TEXT_MESSAGE_START → (TEXT_MESSAGE_CONTENT → TEXT_MESSAGE_CONTENT ...) → TEXT_MESSAGE_END

Each message is framed by TEXT_MESSAGE_START and TEXT_MESSAGE_END, with one or more TEXT_MESSAGE_CONTENT events in between.

For example, an assistant reply “Hello” might be sent as (agent’s side):
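A minimal sketch of that sequence, using plain dicts instead of the SDK's event classes. The `messageId`, `role` and `delta` fields come from the descriptions above; `stream_text_message` is a hypothetical helper, and splitting "Hello" into two chunks is only for illustration.

```python
import uuid
from typing import Iterable, Iterator


def stream_text_message(chunks: Iterable[str], role: str = "assistant") -> Iterator[dict]:
    """Frame a stream of text chunks with START and END events."""
    message_id = str(uuid.uuid4())
    yield {"type": "TEXT_MESSAGE_START", "messageId": message_id, "role": role}
    for chunk in chunks:
        if chunk:  # skip empty chunks so every delta carries text
            yield {"type": "TEXT_MESSAGE_CONTENT", "messageId": message_id, "delta": chunk}
    yield {"type": "TEXT_MESSAGE_END", "messageId": message_id}


events = list(stream_text_message(["Hel", "lo"]))
for event in events:
    print(event["type"], event.get("delta", ""))
```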

Here's how the UI might handle it:
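Sketched in Python for consistency with the other examples in this post (a real UI would more likely be TypeScript), the handler only needs to append each delta to the message with the matching `messageId`. `apply_text_event` and the shape of the `messages` store are hypothetical.

```python
def apply_text_event(messages: dict, event: dict) -> None:
    """Update an in-memory message store from one text message event."""
    kind = event["type"]
    if kind == "TEXT_MESSAGE_START":
        messages[event["messageId"]] = {"role": event["role"], "content": "", "done": False}
    elif kind == "TEXT_MESSAGE_CONTENT":
        # Append the new chunk so the UI can re-render the partial text.
        messages[event["messageId"]]["content"] += event["delta"]
    elif kind == "TEXT_MESSAGE_END":
        messages[event["messageId"]]["done"] = True


messages: dict = {}
for incoming in [
    {"type": "TEXT_MESSAGE_START", "messageId": "m1", "role": "assistant"},
    {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m1", "delta": "Hel"},
    {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m1", "delta": "lo"},
    {"type": "TEXT_MESSAGE_END", "messageId": "m1"},
]:
    apply_text_event(messages, incoming)
print(messages["m1"])
```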

In summary, Text Message events handle all streaming of textual content between the agent and UI, decoupling chat logic from the transport.

✅ Tool Call Events

These events represent the lifecycle of tool calls made by agents. Tool calls follow a streaming pattern similar to text messages.

1) TOOL_CALL_START : emitted when the agent begins calling a tool. Includes a unique tool_call_id and tool_call_name.

2) TOOL_CALL_ARGS : optionally emitted if the tool’s arguments are streamed in parts. Each event carries a delta field containing a partial chunk of the argument data (useful for large or dynamically generated inputs).

3) TOOL_CALL_END : marks the completion of the tool call execution.

4) TOOL_CALL_RESULT : carries the final output returned by the tool.

Example flow:

TOOL_CALL_START → (TOOL_CALL_ARGS ...) → TOOL_CALL_END → TOOL_CALL_RESULT

Here's how it looks on the agent side (emitting events):
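The sketch below emits the four tool call events as plain dicts. The camelCase `toolCallId` and `toolCallName` fields, the `content` field on the result, and the chunking of arguments into 16-character pieces are assumptions for illustration; `stream_tool_call` and `get_price` are hypothetical, and the price is a made-up constant.

```python
import json
from typing import Any, Callable, Iterator


def stream_tool_call(tool_call_id: str, tool_name: str, args: dict,
                     tool: Callable[..., Any], chunk_size: int = 16) -> Iterator[dict]:
    """Emit START, streamed ARGS, END, then the RESULT of running the tool."""
    yield {"type": "TOOL_CALL_START", "toolCallId": tool_call_id, "toolCallName": tool_name}
    encoded = json.dumps(args)
    for start in range(0, len(encoded), chunk_size):
        # Each ARGS event carries one partial chunk of the JSON arguments.
        yield {"type": "TOOL_CALL_ARGS", "toolCallId": tool_call_id,
               "delta": encoded[start:start + chunk_size]}
    yield {"type": "TOOL_CALL_END", "toolCallId": tool_call_id}
    result = tool(**args)
    yield {"type": "TOOL_CALL_RESULT", "toolCallId": tool_call_id,
           "content": json.dumps(result)}


def get_price(symbol: str) -> dict:
    """Hypothetical tool returning a hard-coded price."""
    return {"symbol": symbol, "price": 123.45}


tool_events = list(stream_tool_call("call-1", "get_price", {"symbol": "ACME"}, get_price))
print([e["type"] for e in tool_events])
```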

Here’s the example code on the frontend side:
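A minimal Python sketch of the consuming side: buffer the argument chunks per tool call, parse them once the call ends, and attach the result when it arrives. `apply_tool_event`, the status labels and the camelCase field names are assumptions made for this example.

```python
import json

TOOL_EVENT_TYPES = {"TOOL_CALL_START", "TOOL_CALL_ARGS", "TOOL_CALL_END", "TOOL_CALL_RESULT"}


def apply_tool_event(calls: dict, event: dict) -> None:
    """Track each tool call's progress, keyed by its toolCallId."""
    kind = event["type"]
    if kind not in TOOL_EVENT_TYPES:
        return
    call_id = event["toolCallId"]
    if kind == "TOOL_CALL_START":
        calls[call_id] = {"name": event["toolCallName"], "args_buffer": "",
                          "args": None, "status": "running", "result": None}
    elif kind == "TOOL_CALL_ARGS":
        calls[call_id]["args_buffer"] += event["delta"]
    elif kind == "TOOL_CALL_END":
        call = calls[call_id]
        # The buffered chunks only form valid JSON once the call has ended.
        call["args"] = json.loads(call["args_buffer"] or "{}")
        call["status"] = "awaiting_result"
    elif kind == "TOOL_CALL_RESULT":
        calls[call_id]["result"] = event["content"]
        calls[call_id]["status"] = "done"


calls: dict = {}
for incoming in [
    {"type": "TOOL_CALL_START", "toolCallId": "call-1", "toolCallName": "get_price"},
    {"type": "TOOL_CALL_ARGS", "toolCallId": "call-1", "delta": '{"symbol": '},
    {"type": "TOOL_CALL_ARGS", "toolCallId": "call-1", "delta": '"ACME"}'},
    {"type": "TOOL_CALL_END", "toolCallId": "call-1"},
]:
    apply_tool_event(calls, incoming)
print(calls["call-1"]["status"], calls["call-1"]["args"])
```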

By listening to these events, the UI can show real-time tool progress (such as “Loading data…”) and then display the results (under a “tool” role) when ready.

✅ State Management Events

These events are used to manage and synchronize the agent’s state with the frontend. Instead of re-sending a large data blob each time, the agent follows an efficient snapshot-delta pattern where:

1) StateSnapshot : sends a full JSON snapshot of the current state. Useful for initial sync or occasional full refreshes.

2) StateDelta : sends incremental changes as a JSON Patch diff (RFC 6902). Reduces data transfer for frequent updates.

3) MessagesSnapshot (optional) : sends a full conversation history if needed to resync the UI.

Example flow:

StateSnapshot → (StateDelta → StateDelta …) → StateSnapshot → (StateDelta ...)

The agent starts with a StateSnapshot to initialize the frontend, then streams incremental StateDelta events as changes occur. It can send an occasional StateSnapshot to resync if needed.

Here's how it looks on the agent side (emitting events):
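One way to sketch the snapshot-delta pattern: send the first state in full, then diff each later state against the previous one. The `shallow_patch` helper below is hypothetical and only compares top-level keys, which keeps the example short; the `snapshot` and `delta` field names are assumptions.

```python
import copy
from typing import Iterator


def _escape(key: str) -> str:
    """Escape a key for use in a JSON Pointer path (RFC 6901)."""
    return key.replace("~", "~0").replace("/", "~1")


def shallow_patch(old: dict, new: dict) -> list[dict]:
    """Build JSON Patch operations for top-level differences only."""
    ops = [{"op": "remove", "path": f"/{_escape(k)}"} for k in old if k not in new]
    for key, value in new.items():
        if key not in old:
            ops.append({"op": "add", "path": f"/{_escape(key)}", "value": value})
        elif old[key] != value:
            ops.append({"op": "replace", "path": f"/{_escape(key)}", "value": value})
    return ops


def stream_state(states: list[dict]) -> Iterator[dict]:
    """Emit one snapshot, then a delta for every later state that changed."""
    previous = states[0]
    yield {"type": "STATE_SNAPSHOT", "snapshot": copy.deepcopy(previous)}
    for current in states[1:]:
        ops = shallow_patch(previous, current)
        if ops:
            yield {"type": "STATE_DELTA", "delta": ops}
        previous = current


state_events = list(stream_state([
    {"symbol": "ACME", "status": "fetching"},
    {"symbol": "ACME", "status": "done", "price": 123.45},
]))
print(state_events[1]["delta"])
```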

Here’s how to handle those events on the frontend side:
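The receiving side replaces its state on a snapshot and applies the patch on a delta. The sketch below hand-rolls a small subset of JSON Patch (only `add`, `replace` and `remove`, with no bounds checking on list indexes) so it can run without dependencies; in a real app a dedicated JSON Patch library would be the safer choice. `apply_patch` and `apply_state_event` are hypothetical names.

```python
import copy
from typing import Any


def _unescape(token: str) -> str:
    """Undo JSON Pointer escaping (RFC 6901)."""
    return token.replace("~1", "/").replace("~0", "~")


def apply_patch(state: Any, ops: list[dict]) -> Any:
    """Apply add/replace/remove operations to a copy of the state."""
    doc = copy.deepcopy(state)
    for op in ops:
        tokens = [_unescape(t) for t in op["path"].split("/")[1:]]
        if not tokens:
            raise ValueError("whole-document operations are not supported in this sketch")
        parent = doc
        for token in tokens[:-1]:  # walk down to the container being changed
            parent = parent[int(token)] if isinstance(parent, list) else parent[token]
        last, kind = tokens[-1], op["op"]
        if kind not in ("add", "replace", "remove"):
            raise ValueError(f"unsupported op: {kind}")
        if isinstance(parent, list):
            if kind == "add":
                parent.insert(len(parent) if last == "-" else int(last), op["value"])
            elif kind == "replace":
                parent[int(last)] = op["value"]
            else:
                del parent[int(last)]
        elif kind == "remove":
            del parent[last]
        else:
            if kind == "replace" and last not in parent:
                raise KeyError(last)
            parent[last] = op["value"]
    return doc


def apply_state_event(state: Any, event: dict) -> Any:
    if event["type"] == "STATE_SNAPSHOT":
        return copy.deepcopy(event["snapshot"])
    if event["type"] == "STATE_DELTA":
        return apply_patch(state, event["delta"])
    return state


cart = apply_state_event(None, {"type": "STATE_SNAPSHOT",
                                "snapshot": {"cart": [{"sku": "A1", "qty": 1}]}})
cart = apply_state_event(cart, {"type": "STATE_DELTA", "delta": [
    {"op": "add", "path": "/cart/-", "value": {"sku": "B2", "qty": 2}},
    {"op": "replace", "path": "/cart/0/qty", "value": 3},
]})
print(cart)
```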

Let's suppose an agent is updating a UI table or a shopping cart: it can add or modify entries via a state delta instead of re-sending the whole table.

By using state events, the UI can merge small updates without restarting from scratch.

✅ Special Events

Special events are “catch-all” events in AG-UI. They are used when an interaction doesn’t fit into the usual categories. These don’t follow the standard lifecycle or streaming patterns of other event types.

In simple terms: If you need the agent and frontend to do something unique or custom that the standard events don’t cover, you use special events.

1) RawEvent :

- Used to pass through events from external systems.

- Acts as a container for events originating outside AG-UI, preserving the original data.

- The optional source property can identify the external system.

- Frontends can handle these events directly or delegate them to system-specific handlers.

- Properties: event contains the original event data, and source (optional) identifies the external system.

2) CustomEvent :

- Used for application-specific events not covered by standard types.

- Explicitly part of the protocol (unlike Raw) but fully defined by the app.

- Enables protocol extensions without changing the specification.

- Properties: name identifies the custom event, and value contains the associated data.

Say you want to implement a multi-agent workflow where control passes from one agent to another. You could define a custom event like:
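A minimal sketch of such a handoff event, assuming a CUSTOM type string and an app-defined value payload. The `handoff_event` and `apply_handoff` helpers, the agent names and the `from`/`to`/`reason` keys are all hypothetical.

```python
def handoff_event(from_agent: str, to_agent: str, reason: str) -> dict:
    """Build a custom event; the shape of `value` is entirely app-defined."""
    return {
        "type": "CUSTOM",
        "name": "handoff",
        "value": {"from": from_agent, "to": to_agent, "reason": reason},
    }


def apply_handoff(active_agent: str, event: dict, known_agents: set[str]) -> str:
    """App-side enforcement: switch the active agent only for valid handoffs."""
    if event.get("type") != "CUSTOM" or event.get("name") != "handoff":
        return active_agent
    target = event["value"]["to"]
    if target not in known_agents:
        raise ValueError(f"unknown agent: {target}")
    return target


handoff = handoff_event("researcher", "writer", "research complete")
active = apply_handoff("researcher", handoff, {"researcher", "writer"})
print(active)
```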

AG-UI doesn’t inherently know what “handoff” means; it’s up to your application code to enforce it. So Custom enables this pattern, but it’s entirely app-defined.

Example flow:

RawEvent → CustomEvent → RawEvent → CustomEvent …

These events don’t have a fixed order: they appear as needed in the event stream, depending on external triggers and app-specific logic.

Here's a simple example on the agent side:
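As a sketch with plain dicts, the two special events are just thin wrappers around the properties listed above. The RAW and CUSTOM type strings are assumptions, and `raw_event`, `custom_event`, the "billing-webhook" source and the "show_chart" name are hypothetical.

```python
from typing import Any, Optional


def raw_event(original: dict, source: Optional[str] = None) -> dict:
    """Wrap an event from an external system without altering its data."""
    wrapped = {"type": "RAW", "event": original}
    if source is not None:
        wrapped["source"] = source  # optional: identifies the external system
    return wrapped


def custom_event(name: str, value: Any) -> dict:
    """Build an application-defined event identified by its name."""
    return {"type": "CUSTOM", "name": name, "value": value}


special_events = [
    raw_event({"kind": "invoice.paid", "id": 42}, source="billing-webhook"),
    custom_event("show_chart", {"symbol": "ACME", "range": "1M"}),
]
for event in special_events:
    print(event)
```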

Here's how it is handled on the frontend side:
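One possible shape for the receiving side is a small router that looks up a handler by custom event name or by raw event source, and records anything it cannot route. `SpecialEventRouter` is a hypothetical class sketched in Python for this post; it is not part of any SDK.

```python
from typing import Any, Callable


class SpecialEventRouter:
    """Routes CUSTOM events by name and RAW events by source."""

    def __init__(self) -> None:
        self._custom: dict[str, Callable[[Any], None]] = {}
        self._raw: dict[str, Callable[[Any], None]] = {}
        self.unhandled: list[dict] = []

    def on_custom(self, name: str, handler: Callable[[Any], None]) -> None:
        self._custom[name] = handler

    def on_raw(self, source: str, handler: Callable[[Any], None]) -> None:
        self._raw[source] = handler

    def dispatch(self, event: dict) -> bool:
        """Return True if a handler ran, False if the event was set aside."""
        handler, payload = None, None
        if event.get("type") == "CUSTOM":
            handler, payload = self._custom.get(event.get("name")), event.get("value")
        elif event.get("type") == "RAW":
            handler, payload = self._raw.get(event.get("source")), event.get("event")
        if handler is None:
            self.unhandled.append(event)
            return False
        handler(payload)
        return True


received: list = []
router = SpecialEventRouter()
router.on_custom("handoff", received.append)
router.on_raw("billing-webhook", received.append)
router.dispatch({"type": "CUSTOM", "name": "handoff", "value": {"to": "writer"}})
router.dispatch({"type": "RAW", "event": {"id": 42}, "source": "billing-webhook"})
print(received)
```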

In short, special events provide an extension point when you need “something extra” beyond the core AG-UI schema.

✅ Draft Events

There are more events that are currently in draft status and may change before finalization. Here are some of those types:

- Activity Events: will represent agent progress between messages, letting the UI show fine-grained updates in order.

- Reasoning Events: will support LLM reasoning visibility and continuity, enabling chain-of-thought reasoning.

- Meta Events: will provide annotations or signals independent of agent runs, like user feedback or external events.

- Modified Lifecycle Events: will extend existing lifecycle events to handle interrupts or branching.

Check the complete list on the official docs.

In the next section, you will find a live interaction flow to understand how all the events come together to work in practice.

Live Interaction Flow (showing event stream)

Here’s a live example combining multiple event types to illustrate the full lifecycle of an agent interaction. You can try it out in the AG-UI Dojo:

https://www.copilotkit.ai/blog/introducing-the-ag-ui-dojo

Here's the complete event sequence from the LangGraph AG-UI demo showing a stock analysis agent:
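The demo's recorded output is not reproduced here. The sketch below uses a made-up sequence in the same spirit (one tool call, one state update, one streamed reply) together with a hypothetical `check_sequence` helper that verifies the framing rules described in the earlier sections. All IDs, field names and values are assumptions.

```python
def check_sequence(events: list[dict]) -> list[str]:
    """Return a list of framing problems; an empty list means the stream looks well-formed."""
    problems = []
    if not events or events[0]["type"] != "RUN_STARTED":
        problems.append("stream must begin with RUN_STARTED")
    if not events or events[-1]["type"] not in ("RUN_FINISHED", "RUN_ERROR"):
        problems.append("stream must end with RUN_FINISHED or RUN_ERROR")
    open_messages, open_calls = set(), set()
    for event in events:
        kind = event["type"]
        if kind == "TEXT_MESSAGE_START":
            open_messages.add(event["messageId"])
        elif kind == "TEXT_MESSAGE_CONTENT" and event["messageId"] not in open_messages:
            problems.append(f"content for unopened message {event['messageId']}")
        elif kind == "TEXT_MESSAGE_END":
            open_messages.discard(event["messageId"])
        elif kind == "TOOL_CALL_START":
            open_calls.add(event["toolCallId"])
        elif kind == "TOOL_CALL_ARGS" and event["toolCallId"] not in open_calls:
            problems.append(f"args for unopened tool call {event['toolCallId']}")
        elif kind == "TOOL_CALL_END":
            open_calls.discard(event["toolCallId"])
    problems += [f"message {m} never ended" for m in sorted(open_messages)]
    problems += [f"tool call {c} never ended" for c in sorted(open_calls)]
    return problems


# Illustrative only: not the actual output of the demo.
illustrative_run = [
    {"type": "RUN_STARTED", "threadId": "t1", "runId": "r1"},
    {"type": "STATE_SNAPSHOT", "snapshot": {"status": "fetching"}},
    {"type": "TOOL_CALL_START", "toolCallId": "c1", "toolCallName": "get_price"},
    {"type": "TOOL_CALL_ARGS", "toolCallId": "c1", "delta": '{"symbol": "ACME"}'},
    {"type": "TOOL_CALL_END", "toolCallId": "c1"},
    {"type": "TOOL_CALL_RESULT", "toolCallId": "c1", "content": "123.45"},
    {"type": "STATE_DELTA", "delta": [{"op": "replace", "path": "/status", "value": "done"}]},
    {"type": "TEXT_MESSAGE_START", "messageId": "m1", "role": "assistant"},
    {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m1", "delta": "ACME trades at 123.45."},
    {"type": "TEXT_MESSAGE_END", "messageId": "m1"},
    {"type": "RUN_FINISHED", "threadId": "t1", "runId": "r1"},
]
print(check_sequence(illustrative_run))
```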

Once you get the hang of AG-UI events, you realize how much simpler and more predictable interactive agents become.

It’s one of those specs that quietly solves a big problem for anyone building serious agent apps.

I hope you learned something valuable. Have a great day!
