Over 18 months ago, CopilotKit released its first version with one clear goal: give developers the tools to build intelligent, AI-native interfaces. Not just bots with text boxes, but deeply integrated, usable, agentic UIs.

At the time, we were making a few bets:

- Agents are not going to lead to full automation anytime soon for most use cases.

- Humans would need to remain deeply in the loop, and therefore, good tooling was needed to facilitate the interaction between agents & users.

- Agents need good UI, and it is difficult to build from scratch.

- Agents & LLMs need to be embedded natively in your application as first-class citizens. Good building blocks are needed to facilitate this in a clean & robust way.

Those bets are now playing out, and over the last few months we’ve seen a wave of attention around agentic UI.

First, with our own AG-UI protocol, which we released in May and which has seen explosive growth in usage (installs) and adoption across the agent framework ecosystem (LangGraph, CrewAI, Microsoft Agent Framework, Google’s ADK, PydanticAI, Mastra, and more).

Then, with MCP-UI, which has now been adopted by Anthropic & OpenAI to power MCP Apps.

And finally, with A2UI, a generative UI spec from Google that builds on top of A2A (the Agent2Agent protocol).

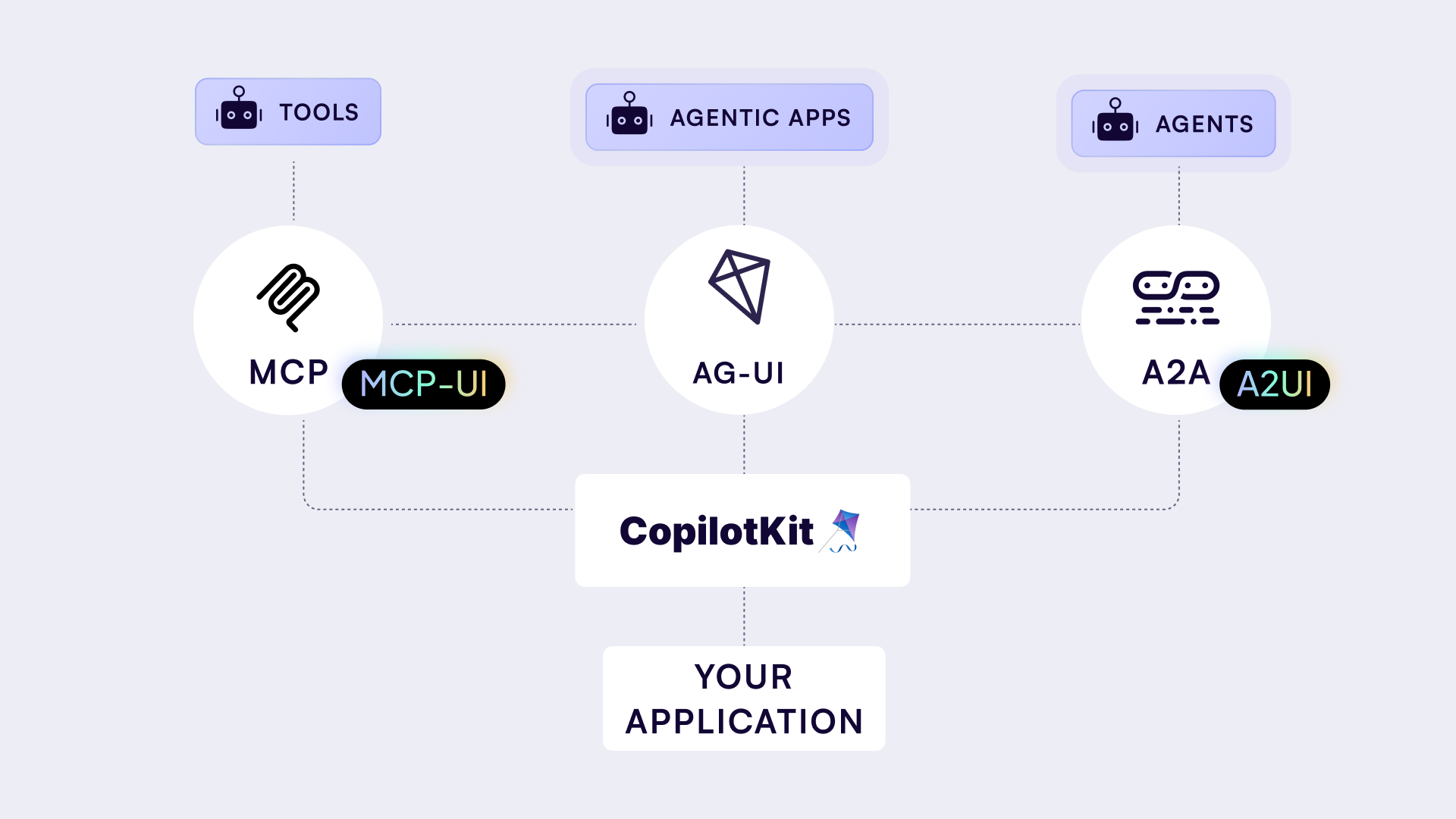

It is great to see the space converge around the clear need for great Agentic UI, and to see it offer a spectrum of solutions, each powering a different type of Agentic & Generative UI.

CopilotKit & AG-UI work well together with both MCP-UI & A2UI, and we are in close collaboration with those teams on handshakes that make them work seamlessly together.

Let me explain the different types of Generative UI & how they all fit together:

What Is Agentic UI?


Agentic UI refers to user interfaces where AI agents can drive, update, and respond to real-time UI elements, not just output text. Think beyond “chat.” A truly agentic UI is one where:

- The LLM doesn’t just describe a form, it fills it out.

- The UI isn’t just static, it updates live as the agent reasons.

- The user isn’t limited to typing - they click, select, and interact.

Agentic UIs are about moving LLMs from pure language interfaces to interactive, visual, and structured user experiences - while still leveraging the intelligence of the model.

Why Does It Matter?

1. Better UX

Nobody wants to chat with a spreadsheet. Sometimes, the right UX is a chart, not a paragraph. Generative UI makes AI apps faster and more usable.

2. Safer and More Controlled

With structured UIs and typed actions, developers can define the surface area of what an AI can do, and users get clarity on what’s happening.

3. Scalable Patterns

Prompt engineering can only get you so far. Agentic UIs let us move from brittle prompt hacks to declarative interfaces and reusable components.
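Here is a minimal TypeScript sketch of the "surface area" point above, using typed actions. It assumes a hypothetical registry: defineAction and runAction are illustrative names, not the CopilotKit API. The app declares which actions exist and which arguments they take. Any call the model proposes outside that contract is rejected before it touches app state.

```typescript
// Hypothetical action registry: illustrative only, not the CopilotKit API.
type ParamType = "string" | "number" | "boolean";

interface ActionSpec {
  name: string;
  description: string;
  params: Record<string, ParamType>;
  handler: (args: Record<string, unknown>) => string;
}

const registry = new Map<string, ActionSpec>();

function defineAction(spec: ActionSpec): void {
  registry.set(spec.name, spec);
}

// Validate a model-proposed call against the declared contract before running it.
function runAction(name: string, args: Record<string, unknown>): string {
  const spec = registry.get(name);
  if (!spec) {
    throw new Error(`Unknown action: ${name}`);
  }
  // Every declared parameter must be present with the declared type.
  for (const [key, type] of Object.entries(spec.params)) {
    if (typeof args[key] !== type) {
      throw new Error(`Argument "${key}" must be a ${type}`);
    }
  }
  // Undeclared arguments are rejected too.
  for (const key of Object.keys(args)) {
    if (!(key in spec.params)) {
      throw new Error(`Unexpected argument: ${key}`);
    }
  }
  return spec.handler(args);
}

// The app exposes exactly one action: filling in a form field.
const formState: Record<string, string> = {};

defineAction({
  name: "fillField",
  description: "Set the value of a form field",
  params: { field: "string", value: "string" },
  handler: (args) => {
    formState[args.field as string] = args.value as string;
    return `Set ${args.field}`;
  },
});
```

In this sketch, the model can fill in a form field. A call such as runAction("deleteAccount", {}) throws because the developer never declared that action.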

Three Types of Generative UI

We usually see generative UIs fall into three buckets:

🧱 1. Static / Developer-Defined UI

You write and control the UI. The AI fills in data or triggers actions — but the UI itself is hardcoded. Example: CopilotTextarea, where you own the text box, and the AI simply augments it.


🧩 2. Declarative UI

The agent outputs a structured description of UI (e.g., JSON schema), and the app renders components based on that. More flexible than static UI, but safer than raw HTML. This is where A2UI and OpenUI specs come in.
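Here is a minimal sketch of the declarative pattern. It assumes a made-up JSON shape, which is not the A2UI or OpenUI schema. The agent sends data describing components, and the app maps each node type onto a renderer it already owns. Unknown types are dropped rather than executed, and that is where the safety over raw HTML comes from. Rendering to an HTML string keeps the sketch dependency-free; a real app would map nodes to React or Angular components.

```typescript
// Hypothetical UI description format; not the A2UI or OpenUI schema.
type UINode =
  | { type: "card"; title: string; children: UINode[] }
  | { type: "text"; value: string }
  | { type: "button"; label: string; actionId: string };

// Escape agent-supplied strings so they are treated as text, never markup.
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// The app's component catalog: the only things the agent can ask for.
function renderNode(node: unknown): string {
  if (typeof node !== "object" || node === null) return "";
  const n = node as { type?: unknown };
  switch (n.type) {
    case "card": {
      const card = node as Extract<UINode, { type: "card" }>;
      const kids = Array.isArray(card.children) ? card.children.map(renderNode).join("") : "";
      return `<section class="card"><h3>${escapeHtml(String(card.title))}</h3>${kids}</section>`;
    }
    case "text": {
      const text = node as Extract<UINode, { type: "text" }>;
      return `<p>${escapeHtml(String(text.value))}</p>`;
    }
    case "button": {
      const btn = node as Extract<UINode, { type: "button" }>;
      return `<button data-action="${escapeHtml(String(btn.actionId))}">${escapeHtml(String(btn.label))}</button>`;
    }
    default:
      // Unknown component types are ignored instead of rendered.
      return "";
  }
}

// Example agent output, including a node type the catalog does not know.
const agentOutput = JSON.parse(`{
  "type": "card",
  "title": "Flight found",
  "children": [
    { "type": "text", "value": "SFO -> JFK, 8:05am" },
    { "type": "button", "label": "Book", "actionId": "book_flight" },
    { "type": "script", "src": "evil.js" }
  ]
}`);

console.log(renderNode(agentOutput));
```

The unknown "script" node is silently dropped. The "->" in the text is escaped, so nothing the agent sends is ever interpreted as markup.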


🧪 3. Fully-Generated UI

The LLM outputs raw HTML/CSS or JSX. Wildly flexible — but risky, hard to control, and often not production-grade. Sometimes useful for prototyping or internal tools.


The Protocols Behind Agentic UI

🔌 AG-UI: The Bridge Between Agents and Users

AG-UI (Agent ↔ UI) is our open protocol for real-time, bi-directional communication between agents and your frontend. It handles streaming responses, event callbacks, multi-agent coordination, shared state, and more.

AG-UI doesn’t dictate what the UI looks like — it just makes sure your agent and your UI can talk in real time. It supports typed actions, declarative UI specs, and even streaming partial updates.

Think of it as the WebSocket of agentic apps — not the content, but the channel.
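The event-driven model can be sketched as a reducer over a stream of typed events. The event names below loosely mirror the kinds of events AG-UI streams, such as text-message chunks and state snapshots. However, the types here are simplified assumptions written for illustration, not the protocol's actual definitions. What matters is the shape: the frontend folds events into view state as they arrive, so the UI can update before the run finishes.

```typescript
// Simplified event shapes, loosely modeled on the kinds of events AG-UI streams.
// Field names are illustrative assumptions, not the official protocol types.
type AgentEvent =
  | { type: "RUN_STARTED"; runId: string }
  | { type: "TEXT_MESSAGE_START"; messageId: string }
  | { type: "TEXT_MESSAGE_CONTENT"; messageId: string; delta: string }
  | { type: "TEXT_MESSAGE_END"; messageId: string }
  | { type: "STATE_SNAPSHOT"; snapshot: Record<string, unknown> }
  | { type: "RUN_FINISHED"; runId: string };

interface ViewState {
  running: boolean;
  messages: Map<string, string>; // messageId -> text streamed so far
  shared: Record<string, unknown>; // state shared between agent and app
}

// Fold one event into the current view state, returning a new state object.
function reduce(state: ViewState, event: AgentEvent): ViewState {
  switch (event.type) {
    case "RUN_STARTED":
      return { ...state, running: true };
    case "TEXT_MESSAGE_START": {
      const messages = new Map(state.messages);
      messages.set(event.messageId, "");
      return { ...state, messages };
    }
    case "TEXT_MESSAGE_CONTENT": {
      // Append the streamed chunk to the message it belongs to.
      const messages = new Map(state.messages);
      messages.set(event.messageId, (messages.get(event.messageId) ?? "") + event.delta);
      return { ...state, messages };
    }
    case "TEXT_MESSAGE_END":
      return state;
    case "STATE_SNAPSHOT":
      return { ...state, shared: event.snapshot };
    case "RUN_FINISHED":
      return { ...state, running: false };
  }
}

// Stand-in for a network stream (SSE, WebSocket, etc.).
async function* fakeStream(): AsyncGenerator<AgentEvent> {
  yield { type: "RUN_STARTED", runId: "r1" };
  yield { type: "TEXT_MESSAGE_START", messageId: "m1" };
  yield { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "Searching " };
  yield { type: "STATE_SNAPSHOT", snapshot: { step: "search", results: 3 } };
  yield { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "flights..." };
  yield { type: "TEXT_MESSAGE_END", messageId: "m1" };
  yield { type: "RUN_FINISHED", runId: "r1" };
}

async function consume(): Promise<ViewState> {
  let state: ViewState = { running: false, messages: new Map(), shared: {} };
  for await (const event of fakeStream()) {
    state = reduce(state, event);
    // A real UI would re-render here, after every event.
  }
  return state;
}
```

Each event updates view state immediately. As a result, the partial message text and the shared state become visible while the run is still in progress.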

🧰 MCP-UI and MCP Apps: Rich UIs in Standard Agents

MCP-UI (and now MCP Apps) is an extension to the Model Context Protocol (MCP), championed by OpenAI and Anthropic. It allows agents to serve rich UIs — like charts, maps, forms — as part of tool calls.

These UIs are typically pre-built HTML templates served in sandboxed iframes. Tools can reference them with a ui:// URI and communicate back to the agent over the MCP channel.
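On the host side, this pattern comes down to two steps: recognize a ui:// resource in a tool result, and mount its HTML in a sandboxed iframe. The sketch below assumes a simplified resource shape (uri, mimeType, text) and an assumed "text/html" MIME type. The helpers toIframeProps and parseWidgetMessage are hypothetical, as is the widget message format. Consult the MCP-UI / MCP Apps specifications for the real payloads. The sketch returns iframe attributes instead of touching the DOM, so it runs anywhere.

```typescript
// Simplified embedded-resource shape (uri, mimeType, text); an assumption here.
// Check the MCP-UI / MCP Apps specifications for the exact payload format.
interface UIResource {
  uri: string;
  mimeType: string;
  text: string;
}

interface IframeProps {
  srcdoc: string;
  sandbox: string;
  title: string;
}

// Hypothetical helper: mount only ui:// HTML resources, and always sandboxed.
function toIframeProps(resource: UIResource): IframeProps | null {
  if (!resource.uri.startsWith("ui://")) return null;
  if (resource.mimeType !== "text/html") return null;
  return {
    srcdoc: resource.text,
    // "allow-scripts" without "allow-same-origin" gives the frame an opaque
    // origin, so its scripts cannot reach the host page's DOM or cookies.
    sandbox: "allow-scripts",
    title: resource.uri,
  };
}

// Hypothetical message a widget might postMessage back (e.g. "refresh data").
interface WidgetMessage {
  type: "tool";
  toolName: string;
  params: Record<string, unknown>;
}

// Treat anything posted from the iframe as untrusted input and validate it
// before forwarding it to the agent.
function parseWidgetMessage(data: unknown): WidgetMessage | null {
  if (typeof data !== "object" || data === null) return null;
  const d = data as { type?: unknown; toolName?: unknown; params?: unknown };
  if (d.type !== "tool" || typeof d.toolName !== "string") return null;
  const params =
    typeof d.params === "object" && d.params !== null && !Array.isArray(d.params)
      ? (d.params as Record<string, unknown>)
      : {};
  return { type: "tool", toolName: d.toolName, params };
}
```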

MCP Apps are powerful: they enable full interactive components inside a chat UI. But they rely on HTML+JS and may require more orchestration from host apps.

🧾 A2UI: Declarative UI from Agents

A2UI is an upcoming open standard from Google that lets agents return structured, composable UI elements — like cards, tables, and buttons — in a declarative format.

It’s not about delivering HTML — it’s about describing UI as data, so the host app can render it natively and safely. A2UI fits naturally with AG-UI: the agent emits A2UI, AG-UI delivers it to the app, and the UI is rendered locally.

We’re excited for A2UI. It aligns closely with the declarative UI approach we’ve supported since the beginning.

How They Work Together

These protocols aren’t competing — they’re layers in the stack:

- AG-UI is the runtime channel: the agent ↔ UI bridge.

- MCP is the agent ↔ tool protocol: the backend action layer.

- A2UI and MCP-UI define the UI payload: what UI gets rendered.

At CopilotKit, we think the future is composable: an agent might use MCP to call tools, A2UI to describe UI, and AG-UI to push updates to the frontend.
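This layering can be sketched in a few lines. Everything below is hypothetical. The tool is a stub standing in for an MCP call, the component shape is invented rather than taken from the A2UI spec, and the event envelope only imitates the idea of an AG-UI event. What the sketch shows is the separation of concerns: each layer hands plain data to the next, and the frontend renders with its own components.

```typescript
// Layer 1, tools (MCP's role): a hypothetical weather tool returning plain data.
interface Forecast {
  city: string;
  tempC: number;
}

async function callWeatherTool(city: string): Promise<Forecast> {
  // Stand-in for a real MCP tool call; returns canned data.
  return { city, tempC: 18 };
}

// Layer 2, UI payload (A2UI's role): the UI described as data.
// This component shape is hypothetical, not the A2UI schema.
interface UIComponent {
  component: "card" | "text";
  props: Record<string, string>;
  children?: UIComponent[];
}

function describeForecast(f: Forecast): UIComponent {
  return {
    component: "card",
    props: { title: `Weather in ${f.city}` },
    children: [{ component: "text", props: { value: `${f.tempC}°C` } }],
  };
}

// Layer 3, transport (AG-UI's role): wrap the payload in an event for the frontend.
// The envelope fields are illustrative assumptions.
interface RenderEvent {
  type: "CUSTOM";
  name: "render_ui";
  value: UIComponent;
}

// One agent turn: call a tool, describe the UI, push it over the channel.
async function runAgentTurn(city: string, emit: (e: RenderEvent) => void): Promise<void> {
  const forecast = await callWeatherTool(city);
  emit({ type: "CUSTOM", name: "render_ui", value: describeForecast(forecast) });
}

runAgentTurn("Paris", (event) => console.log(JSON.stringify(event.value)));
```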

We’re building toward a world where agents are first-class UI citizens — able to trigger structured components, stream updates, and share state with your app.

CopilotKit: The Agentic UI SDK

At CopilotKit, our goal is to make this ecosystem usable.

We provide:

- SDKs and hooks for React/Angular apps

- Pre-built components for common agent patterns

- AG-UI protocol support for agent ↔ UI sync

- Typed actions and shared state between your app and your copilot

- Support for MCP, A2UI, and custom UI formats
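Shared state between an app and its copilot is often synchronized with one full snapshot followed by small deltas. The sketch below assumes those deltas are JSON Patch (RFC 6902) operations with JSON Pointer paths (RFC 6901). It implements only add, replace, and remove, and it skips most of the RFC's error checks. A production app should use a complete JSON Patch library instead.

```typescript
// Minimal subset of JSON Patch (RFC 6902) for syncing shared state.
// Sketch only: no "move"/"copy"/"test" ops and little error checking.
type PatchOp =
  | { op: "add" | "replace"; path: string; value: unknown }
  | { op: "remove"; path: string };

type Container = Record<string, unknown> | unknown[];

function isContainer(v: unknown): v is Container {
  return typeof v === "object" && v !== null;
}

// Split a JSON Pointer (RFC 6901) like "/user/name" into unescaped segments.
function parsePointer(path: string): string[] {
  if (path === "") return [];
  if (!path.startsWith("/")) throw new Error(`Invalid pointer: ${path}`);
  return path
    .slice(1)
    .split("/")
    .map((s) => s.replace(/~1/g, "/").replace(/~0/g, "~"));
}

// Apply operations to a deep copy, leaving the previous state object untouched.
function applyPatch(doc: unknown, ops: PatchOp[]): unknown {
  let root: unknown = JSON.parse(JSON.stringify(doc));
  for (const op of ops) {
    const segments = parsePointer(op.path);
    if (segments.length === 0) {
      if (op.op === "remove") throw new Error("Cannot remove the document root");
      root = op.value;
      continue;
    }
    // Walk down to the container that holds the target location.
    let parent: unknown = root;
    for (const seg of segments.slice(0, -1)) {
      if (!isContainer(parent)) throw new Error(`Path not found: ${op.path}`);
      parent = Array.isArray(parent) ? parent[Number(seg)] : parent[seg];
    }
    if (!isContainer(parent)) throw new Error(`Path not found: ${op.path}`);
    const key = segments[segments.length - 1];
    if (Array.isArray(parent)) {
      // "-" means "past the last element", i.e. append, for add operations.
      const index = key === "-" ? parent.length : Number(key);
      if (op.op === "add") parent.splice(index, 0, op.value);
      else if (op.op === "replace") parent[index] = op.value;
      else parent.splice(index, 1);
    } else if (op.op === "remove") {
      delete parent[key];
    } else {
      parent[key] = op.value;
    }
  }
  return root;
}
```

Because the patch is applied to a copy, the previous state object can still be compared against the new one. This works well with frameworks that re-render on reference changes.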

We’re not just a protocol — we’re the toolkit for building real, production-grade agentic apps today.

Get Involved

If you’re building LLM apps and want a powerful, interactive UX, you should be looking at Agentic UI.

Check out copilotkit.ai, explore our GitHub, or jump into our Discord. Let’s build the future of AI-native software together.
